If you have ever bought a house and had to deal with bankers, brokers, realtors, contractors, movers, utilities, inspectors, insurance agents, lawyers, and sundry government agencies, you know this is kind of how it is.

But let's deal with a different type of failure. PowerShell uses ErrorAction to define how a script responds to errors. You can set it globally by assigning a value to the $ErrorActionPreference variable, and you can override that default for a specific cmdlet with the -ErrorAction common parameter. The possible values are Stop, Inquire, Continue, and SilentlyContinue (PowerShell 3.0 later added Ignore). They are explained in the snippet from help about_preference_variables.

Note that the default ErrorAction is actually Continue, not SilentlyContinue as stated in the help. You can see examples of these settings by running the help about_preference_variables and help about_commonparameters commands. While debugging scripts I set $ErrorActionPreference to Inquire or Continue, but once I put error handling in place I set it to SilentlyContinue.

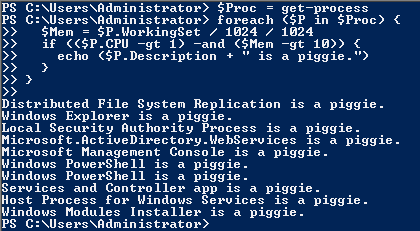
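A minimal sketch of both levels of control follows. The missing path is generated from a random GUID only so the example fails predictably. It also illustrates something worth knowing before relying on trap or try/catch: they only see terminating errors, so a cmdlet's ordinary (non-terminating) error has to be promoted with -ErrorAction Stop, or $ErrorActionPreference = 'Stop', before a catch block will fire.

```powershell
# A path that should not exist: a random GUID under the temp folder (assumption of the example).
$missing = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())

# Session-wide default: report errors and keep running.
$ErrorActionPreference = 'Continue'

# Per-call override: hide this one cmdlet's error message (it is still recorded in $Error).
Get-Item -Path $missing -ErrorAction SilentlyContinue

# Per-call override the other way: make the non-terminating error terminating,
# so that try/catch can see it.
try {
    Get-Item -Path $missing -ErrorAction Stop
    $outcome = 'found'
}
catch {
    $outcome = "caught: $($_.Exception.GetType().Name)"
}
$outcome
```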

What can I do to evaluate errors? One way is to check the value of the automatic variable $?. It is set to $True if the previous command completed successfully and $False if it failed. The variable $LastExitCode holds the exit code of the most recently run native program (an external executable) or script. These variables give me all the power I need to simulate a DOS batch file, if that's what you need to do.
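Here is a short sketch of both variables. To produce a native exit code without depending on any particular external tool, it assumes the current PowerShell executable (located with (Get-Process -Id $PID).Path) can be relaunched as a child process that just exits with code 3.

```powershell
# $? is $true if the previous command succeeded, $false if it reported an error.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString())
Get-Item -Path $missing -ErrorAction SilentlyContinue
$cmdletSucceeded = $?      # $false: Get-Item could not find the path

# $LASTEXITCODE is set by native programs. Assumption: we relaunch the current
# PowerShell executable as a child process that exits with code 3.
$shell = (Get-Process -Id $PID).Path
& $shell -NoProfile -NonInteractive -Command 'exit 3'
$nativeSucceeded = $?      # $false, because the exit code was not 0
$exitCode = $LASTEXITCODE  # 3

if ($exitCode -ne 0) {
    Write-Warning "Child process failed with exit code $exitCode"
}
```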

But wait, there's more!

When an error is raised, information about it is added to the $Error automatic variable. $Error can be indexed like an array, but it behaves like a circular buffer with a default capacity of 256 entries: the most recent error is always $Error[0], and once the session records its 257th error, the oldest one is dropped to make room for the new one. If you really want to keep more than 256 errors, raise the $MaximumErrorCount variable. You can empty the buffer with the $Error.Clear() method. So now I have all the power I need to simulate a VBScript, not that there's anything wrong with that.
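The following sketch records two errors and then inspects the buffer; the error messages are made up for the example. Write-Error with -ErrorAction SilentlyContinue keeps the messages off the screen, but the errors still land in $Error.

```powershell
# Start with an empty buffer so the indexes below are predictable.
$Error.Clear()

# Record two errors without displaying them (SilentlyContinue still logs to $Error).
Write-Error -Message 'first problem'  -ErrorAction SilentlyContinue
Write-Error -Message 'second problem' -ErrorAction SilentlyContinue

$count  = $Error.Count                                  # 2
$newest = $Error[0].Exception.Message                   # 'second problem'
$oldest = $Error[$Error.Count - 1].Exception.Message    # 'first problem'

"$count errors recorded; newest: '$newest'; oldest: '$oldest'"

# Capacity of the buffer (256 unless you have changed it).
"Buffer holds up to $MaximumErrorCount errors"
```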

But wait, there's more!

PowerShell lets us create a trap. This is a block of code that gets executed whenever a terminating error occurs in its scope, so you don't have to check for errors at each critical step. If you need to raise an error yourself, use the Throw keyword. In the trap block, use the Continue keyword to resume with the statement after the one that failed, or the Break keyword to stop the current block of code and pass the error up to the caller.
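A sketch of the two trap keywords, using two hypothetical functions. Invoke-WithTrap uses continue, so execution picks up with the statement after the failing throw; Invoke-WithBreak uses break, so the error escapes to the caller, where a try/catch block (PowerShell 2.0 and later) picks it up.

```powershell
function Invoke-WithTrap {
    # Hypothetical function: records each step so the control flow is visible.
    $log = [System.Collections.Generic.List[string]]::new()

    trap {
        $log.Add("trapped: $($_.Exception.Message)")
        continue    # resume with the statement after the one that failed
    }

    $log.Add('step 1')
    throw 'step 2 failed'
    $log.Add('step 3')    # still runs, because the trap used continue
    $log
}

function Invoke-WithBreak {
    # Hypothetical function: the trap logs the error, then lets it escape.
    trap {
        Write-Warning "giving up: $($_.Exception.Message)"
        break       # stop this function and rethrow the error to the caller
    }

    throw 'fatal problem'
    'never reached'
}

Invoke-WithTrap

try {
    Invoke-WithBreak
    $callerSaw = 'nothing'
}
catch {
    $callerSaw = $_.Exception.Message
}
$callerSaw
```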

You can specify different traps for different types of exceptions. For example, you might have one trap that deals with file system errors and another that deals with network errors. The powershell.com guys do a good job of explaining this and other intricacies. Joel Bennett explores other anomalies.
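Here is a sketch of typed traps. It assumes the file is read with the .NET method [System.IO.File]::ReadAllLines, because an exception thrown by a .NET method call is a terminating error that the traps can see; Get-FirstLine and its -Path parameter are hypothetical names. The traps record the outcome in a hashtable instead of assigning a variable, which sidesteps any question of which scope an assignment inside a trap body lands in.

```powershell
function Get-FirstLine {
    # Hypothetical helper: returns the first line of a file, or says what went wrong.
    param([string]$Path)

    $result = @{ Line = $null; Problem = 'none' }

    # One trap per exception type, most specific first, plus a catch-all.
    trap [System.IO.FileNotFoundException] {
        $result.Problem = 'file not found'
        continue
    }
    trap [System.IO.IOException] {
        $result.Problem = 'I/O error'
        continue
    }
    trap {
        $result.Problem = "unexpected: $($_.Exception.Message)"
        continue
    }

    # A .NET method call: failures surface as terminating errors the traps can see.
    $result.Line = [System.IO.File]::ReadAllLines($Path)[0]
    $result
}

$tmp = [System.IO.Path]::GetTempPath()
# A missing file in an existing folder.
(Get-FirstLine -Path (Join-Path $tmp "$([guid]::NewGuid()).txt")).Problem
# A file in a missing folder (DirectoryNotFoundException is an IOException).
(Get-FirstLine -Path (Join-Path (Join-Path $tmp ([guid]::NewGuid().ToString())) 'nested.txt')).Problem
```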

But wait, there's more!

With PowerShell 2.0 and later, we have the try-catch-finally construct used in modern object-oriented languages. This not only gives you a sweet way to handle errors methodically but also lets you break the script into logical chunks and use the Write-Progress cmdlet to show users how close the script is to completion.
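A sketch of the try-catch-finally pattern with progress reporting. The three stages and the 'Nightly job' activity name are invented for illustration, and the middle stage throws on purpose so you can see that catch reports which stage failed while finally still clears the progress bar.

```powershell
# Hypothetical job stages; the second one fails on purpose.
$stages = [ordered]@{
    'Read the data'      = { 1..3 }
    'Transform the data' = { throw 'bad record on line 2' }
    'Write the report'   = { 'report written' }
}

$completed = [System.Collections.Generic.List[string]]::new()
$step = 0
try {
    foreach ($name in $stages.Keys) {
        $step++
        Write-Progress -Activity 'Nightly job' -Status $name -PercentComplete ([int](100 * ($step - 1) / $stages.Count))
        $action = $stages[$name]
        $null = & $action
        $completed.Add($name)
    }
    $status = 'succeeded'
}
catch {
    # $name still holds the stage that was running when the error occurred.
    $status = "failed during '$name': $($_.Exception.Message)"
}
finally {
    # Runs whether or not an error occurred, so the progress bar is always cleared.
    Write-Progress -Activity 'Nightly job' -Completed
}
$status
```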

And like the trap, you can have different Catch blocks for different types of errors. In the first section of the previous example, where I read the data, I could have one catch block for when the file is not found, another for when I can't read the file, and a catch-all to catch whatever else I didn't catch:

```powershell
Try {
    # Read the data
}
Catch [System.IO.FileNotFoundException] {
    echo "The file wasn't found"
    break
}
Catch [System.IO.IOException] {
    echo "The file could not be opened"
    break
}
Catch {
    echo "An unexpected error happened. Check the Mayan calendar."
    break
}
```
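The skeleton above leaves "# Read the data" as a placeholder. This sketch fills it in, assuming the data is read with the .NET method [System.IO.File]::ReadAllText; Read-DataFile and its -Path parameter are hypothetical names. A .NET method call throws a real exception, so the typed catch blocks apply. A cmdlet such as Get-Content behaves differently: it reports a missing file as a non-terminating ItemNotFoundException, so it would need -ErrorAction Stop and a catch [System.Management.Automation.ItemNotFoundException] block. The sketch also returns a result object instead of calling break, which outside a loop stops the script rather than letting the caller recover.

```powershell
function Read-DataFile {
    # Hypothetical helper: returns an object with the file's text or the problem found.
    [CmdletBinding()]
    param([string]$Path)

    $text = $null
    $problem = $null
    try {
        $text = [System.IO.File]::ReadAllText($Path)
    }
    catch [System.IO.FileNotFoundException] {
        $problem = "The file wasn't found"
    }
    catch [System.IO.IOException] {
        # Also covers DirectoryNotFoundException and files locked by another process.
        $problem = 'The file could not be opened'
    }
    catch {
        $problem = "An unexpected error happened: $($_.Exception.Message)"
    }

    if ($problem) { Write-Warning "$problem ($Path)" }
    [pscustomobject]@{ Path = $Path; Text = $text; Problem = $problem }
}

$missing = Join-Path ([System.IO.Path]::GetTempPath()) "$([guid]::NewGuid()).txt"
(Read-DataFile -Path $missing).Problem
```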


As usual, most of these examples are stupid. If all you do is tell the user that an error happened, you don't need any of these facilities: just let PowerShell show the default error message and take the appropriate ErrorAction. The real power of error handling is taking corrective action: prompt the user for a file that exists, fix the input so it has the correct format, reset a failed network connection, cast the ring of power into the fires of Mount Doom, etc.
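One common corrective action is simply trying again, for example after a dropped network connection. This sketch uses a hypothetical helper, Invoke-WithRetry (not a built-in cmdlet), and simulates a flaky connection with a script block that fails twice before succeeding; in a real script the catch block is where you would reconnect, re-prompt, or repair the input.

```powershell
function Invoke-WithRetry {
    # Hypothetical helper (not a built-in cmdlet): runs $Action, retrying on failure.
    param(
        [Parameter(Mandatory)][scriptblock]$Action,
        [int]$MaxAttempts = 3,
        [int]$DelayMilliseconds = 200
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            return & $Action
        }
        catch {
            if ($attempt -ge $MaxAttempts) {
                throw    # out of attempts: hand the original error to the caller
            }
            Write-Warning "Attempt $attempt failed: $($_.Exception.Message). Retrying..."
            Start-Sleep -Milliseconds $DelayMilliseconds
        }
    }
}

# Usage: a simulated flaky operation that fails twice, then succeeds.
$state = @{ Calls = 0 }
$result = Invoke-WithRetry -Action {
    $state.Calls++
    if ($state.Calls -lt 3) { throw 'connection reset' }
    'connected'
}
"$result after $($state.Calls) attempts"
```

Rethrowing with a bare throw on the last attempt passes the original error along, so the caller (or PowerShell's default handling) still sees what actually went wrong.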

Remember, failure is natural and happens all the time. Recovering by turning failure into success is what error handling is all about. PowerShell has abundant tools to help you achieve that goal.